role & responsibilities

product designer, user researcher, brand designer, front-end PM

Responsible for user research, design artifacts, branding, prototyping, executive presentations, internal and external tech demos, publication, mentoring (2 intern direct reports), workshop facilitation, hackathon facilitation, and subject matter expertise in deep learning and human-in-the-loop machine learning.

project duration: 2 years, concurrent with other projects (50% of my time spent on this project)

background

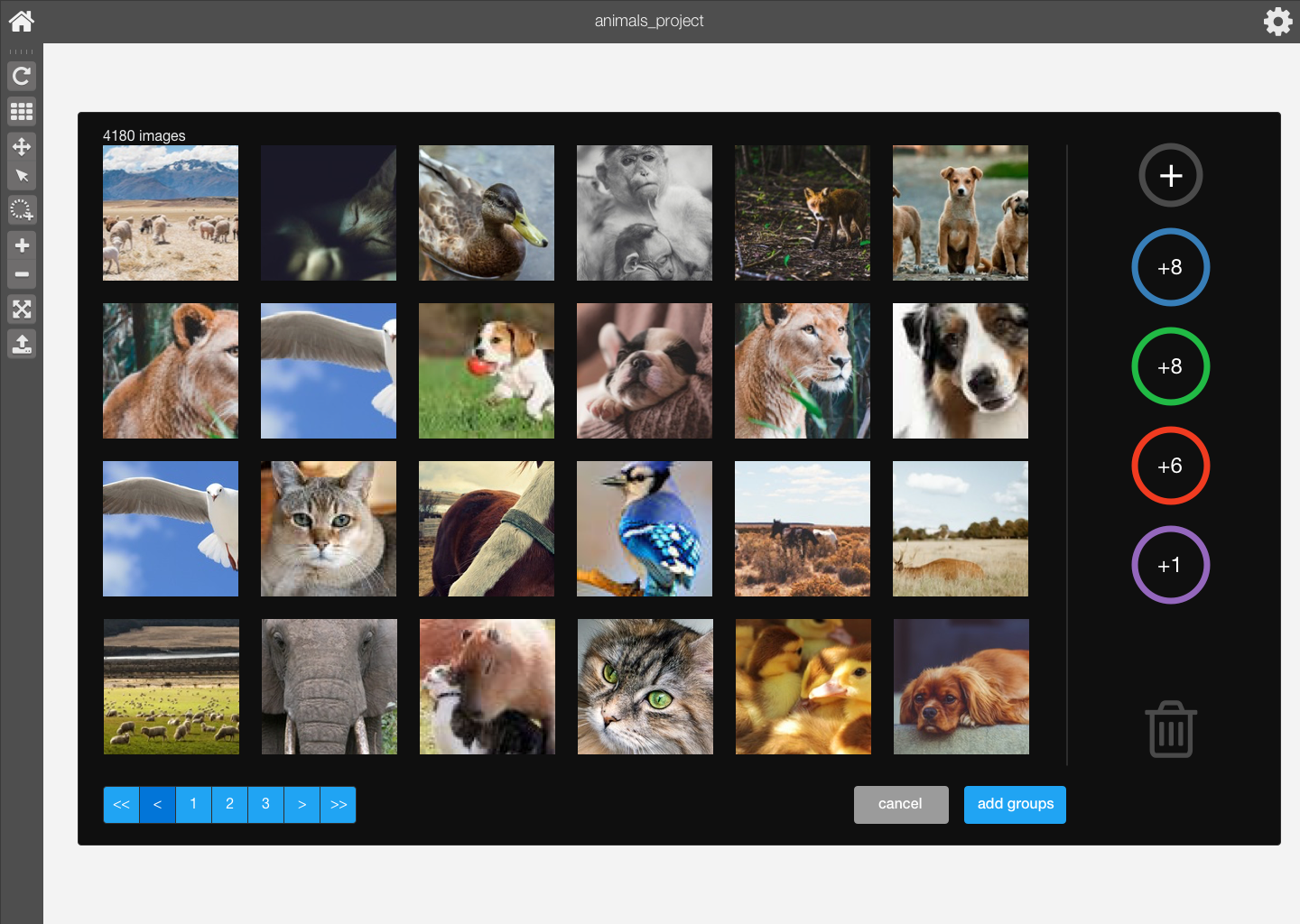

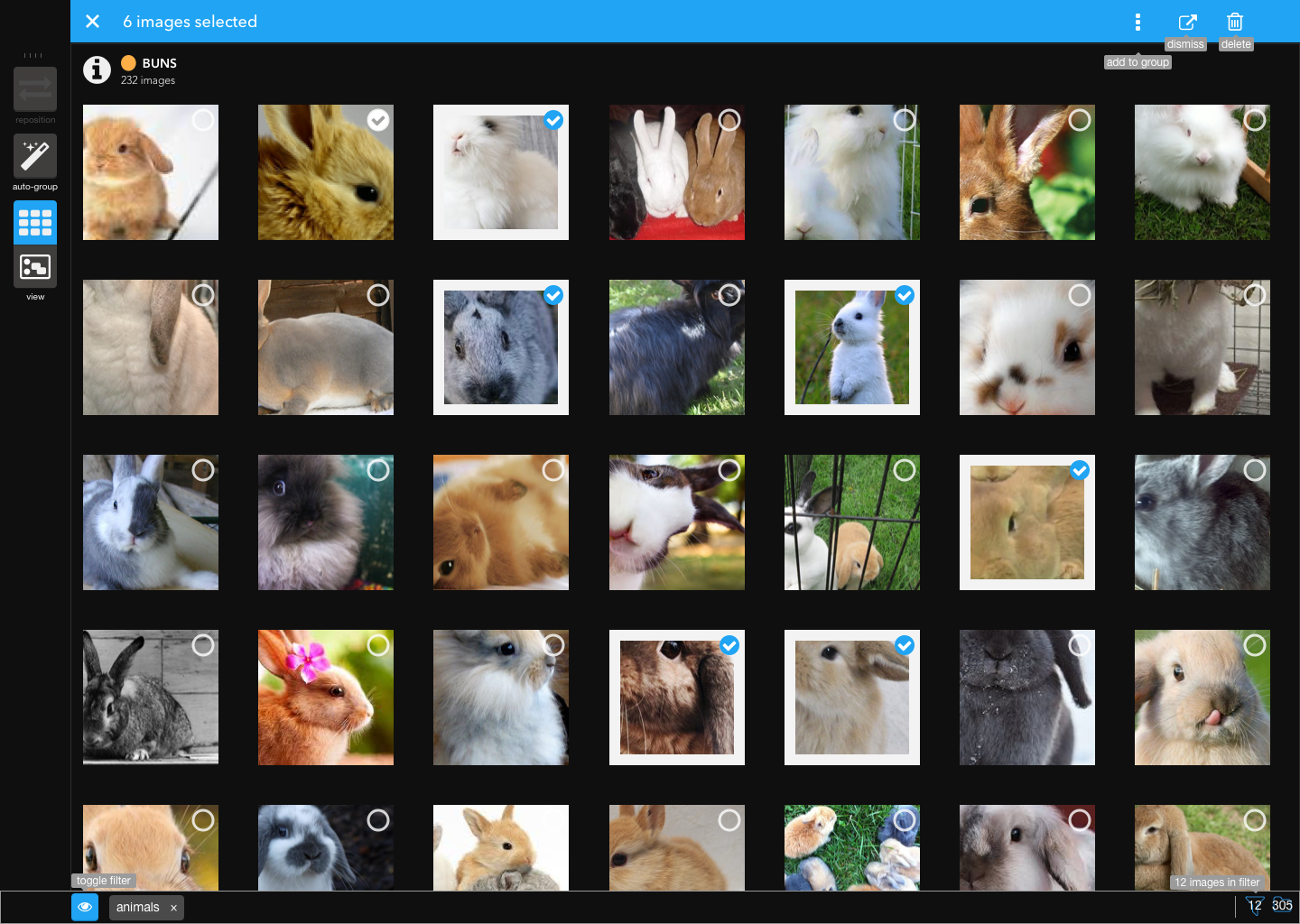

Sharkzor is a web-based application for visually sorting and summarizing large numbers (~100,000) of images. It combines an intuitive user interface with a backend augmented by state-of-the-art machine learning, giving analysts a tool to quickly triage and organize a large-scale dataset and automate their specific analysis workflow.

Sharkzor has been demoed at venues such as NeurIPS 2017 and IUI 2018. The Sharkzor UX and the Interactive Machine Learning Heuristics developed with the project have been published at IUI 2018, at the IEEE VIS 2018 Workshop on Machine Learning from User Interaction for Visualization and Analytics, and at the Black in AI Workshop at NeurIPS 2018.

problem

Analysts need to quickly get a sense of both what exists and what may interest them within datasets on the order of millions of images. The analyst then needs to find all images similar to those they find interesting, organize them into a hierarchy, and discard images not relevant to the task. With one analyst and millions of images, doing this task manually is impossible.

hypotheses

h1

We can provide affordances for explicit and implicit interaction through a web-based interface, and use those interactions to train a deep learning model that approximates the user's mental model.

proposed solution

Once the analyst has a general idea of the dataset and their goal, the deep learning features 'learn' the analyst's organization method and automate it for fast and efficient task completion.

requirements

NOTE: The client, users, and use-cases are confidential and cannot be discussed here.

- web-based interface

- analyst interaction in browser with hundreds of thousands of images in real-time

- analyst interaction with deep-learning backend in a reasonable amount of time (i.e., I set it to run and go get coffee; when I'm back, it's done)

- classification accuracy of 99% for the analyst's defined task

process / prior art

My design process on the Sharkzor project started with a review of the literature and of similar applications. I reviewed existing research literature on image organization as well as applications for interactive deep learning. The initial Sharkzor research idea was an application called Active Canvas, developed internally at PNNL. We then built upon that prototype, pulling in relevant features and interaction paradigms to support the intended use-cases.

Following and contributing to current research in interactive machine learning and explainable AI is an ongoing focus for the Sharkzor project.

process / task analysis

Based on requirements, prior art, and ethnographic observation, I developed and published a model training flow with 3 main phases: (1) triage, (2) organize, and (3) automate. All of Sharkzor's affordances are grouped into these phases, though an affordance is not necessarily exclusive to one phase. We used this strategy to roadmap features, assign resources, and think in an agile manner about each aspect of the product.

process / iteration & innovation

Sharkzor was initially designed (with browser limitations in mind) for use with only 500 images. Supporting the client requirement of one million images meant heavy lifts for all of our teams: design, development, engineering, and deep learning.

Design needed to come up with clever workarounds for issues (how can we interact in real time with a million images in the browser?), as well as understand the specific capabilities of the machine learning offerings in order to provide the most effective solution to the analyst.

Development needed to optimize and streamline front-end and back-end services to support real-time interaction capabilities and accurately capture and transmit the analyst's explicit and implicit interactions to the machine learning framework.

Deep learning needed to produce cutting-edge models and frameworks that worked seamlessly with the web-based front end to give the analyst real-time interactive model training.

Thinking creatively and collaboratively with the business, engineering, and data science teams, I was able to generate innovative solutions to difficult problems, with key features demonstrated below. This work also led to several publications, including Interactive Machine Learning Heuristics.

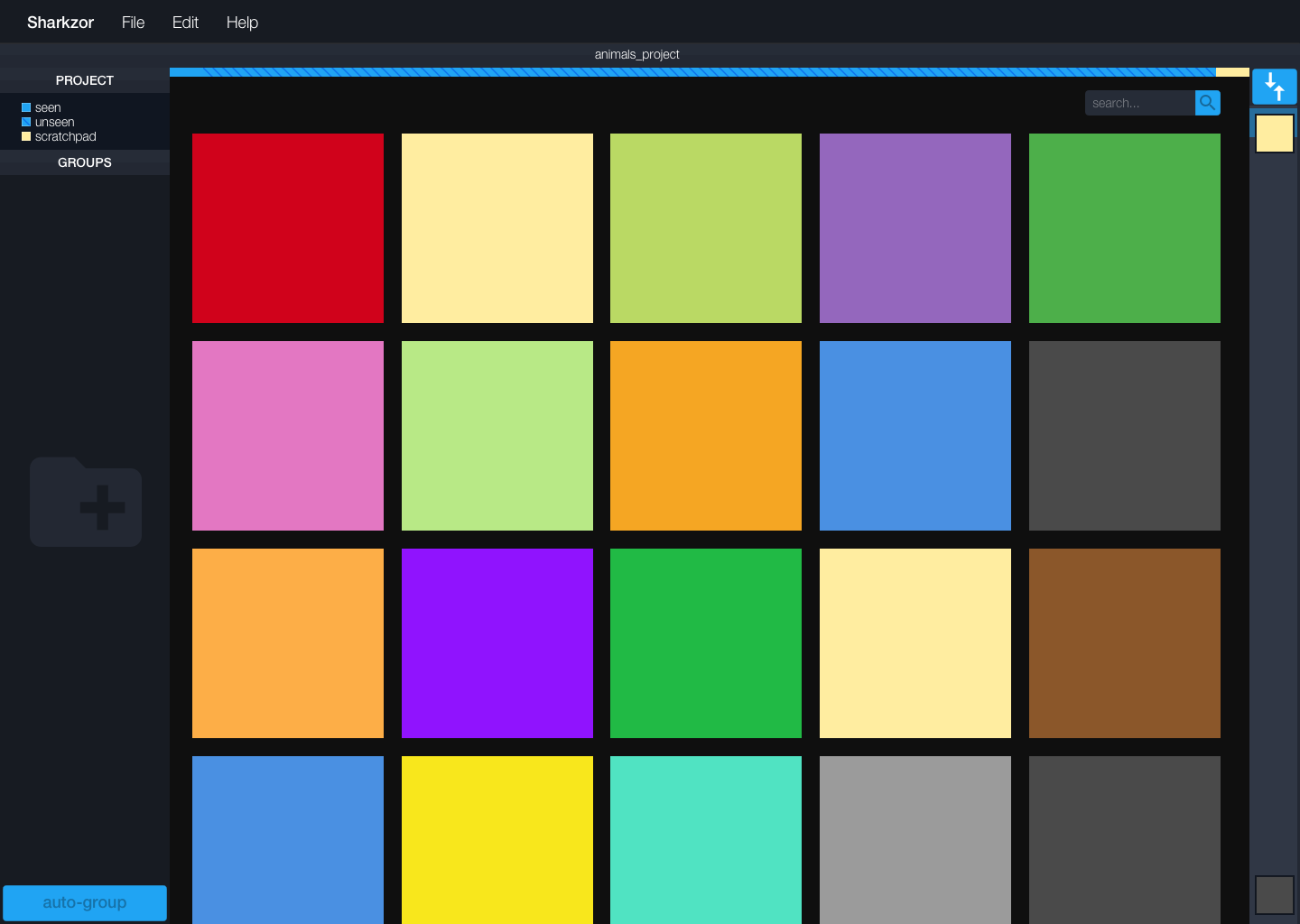

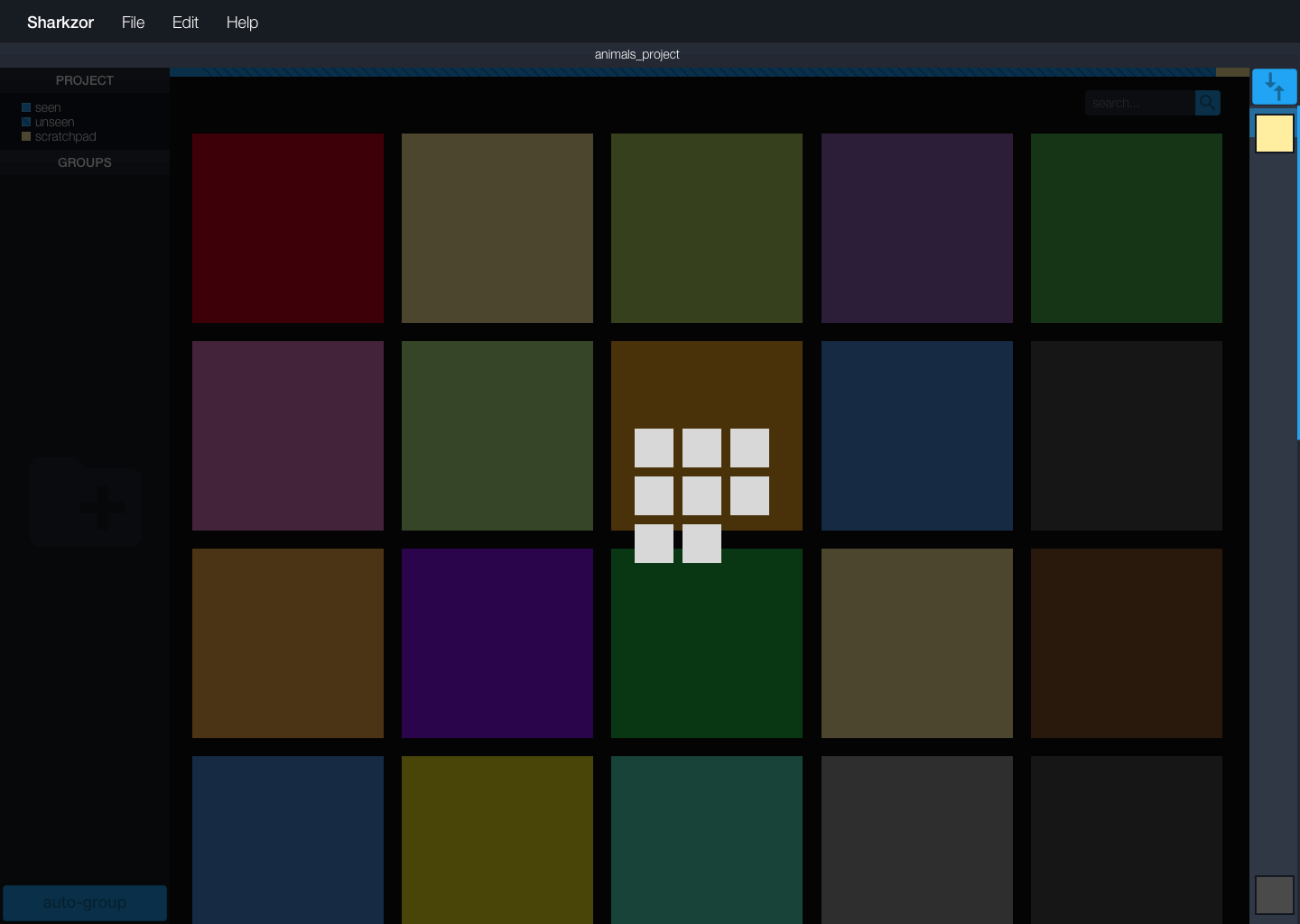

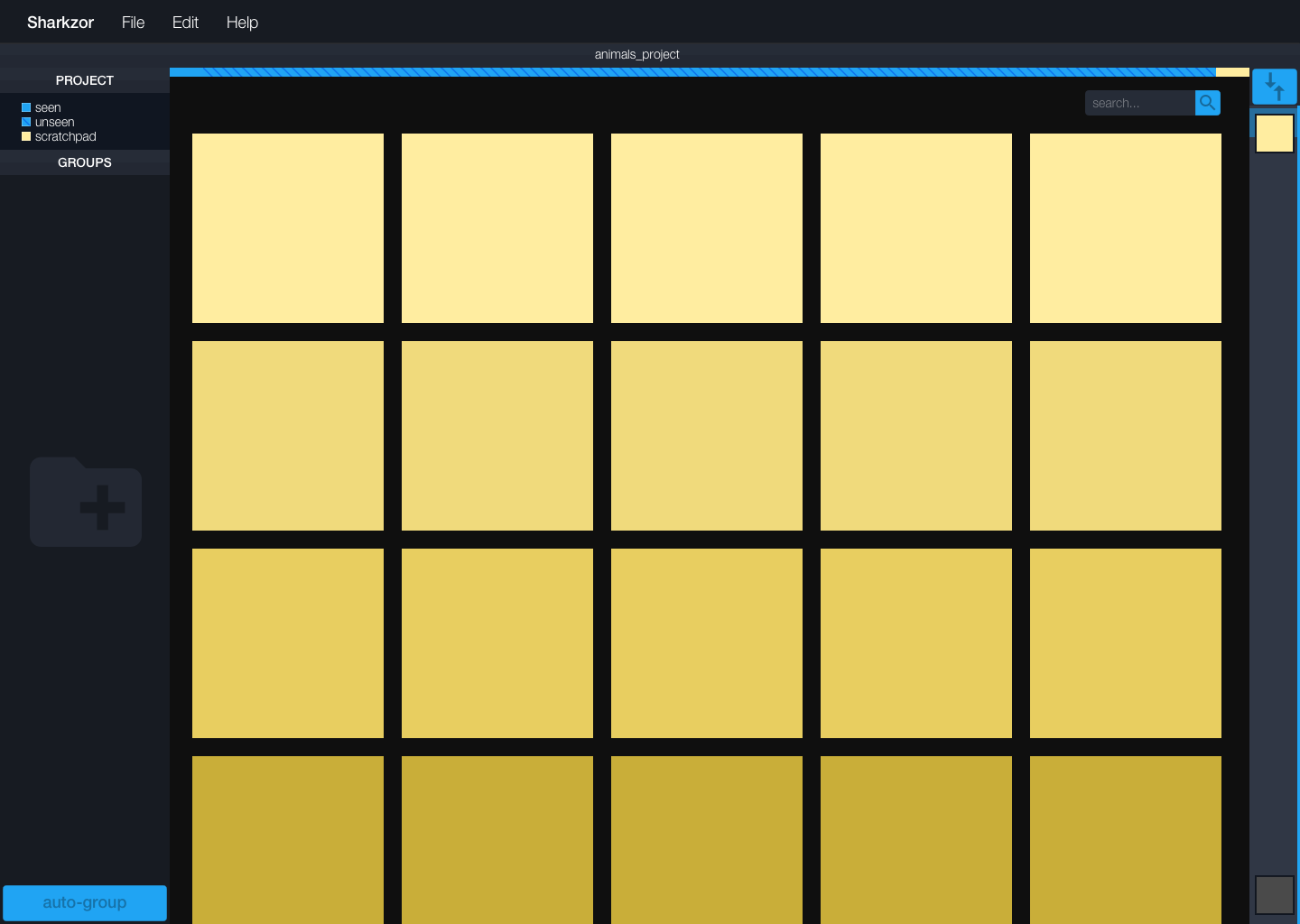

Intermediate high-fidelity mockup versions of the Sharkzor product are shown here. Each version was the working solution for, on average, 8 months of the project.

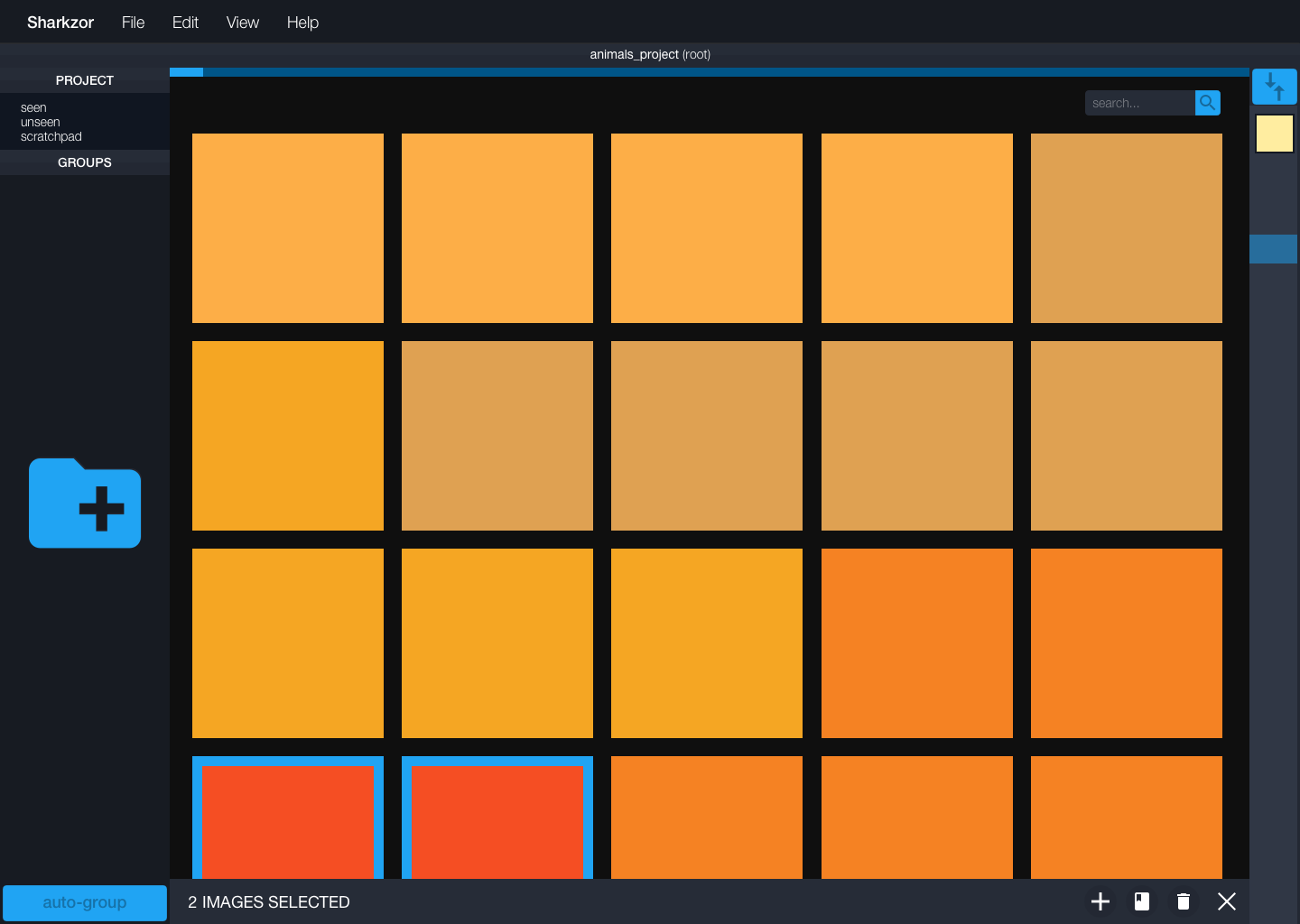

v1. everything is square

v2. welcome to the matrix

v3/4. streamlining interactions

v5. images forever

deliverable / high-fi mockups and prototype

I created high-fidelity mockups for the entire Sharkzor product as well as an interactive prototype. I used these artifacts in quarterly stakeholder presentations and monthly progress readouts. I also created all demo scripts, promotional materials, brand assets, and merch for the product.

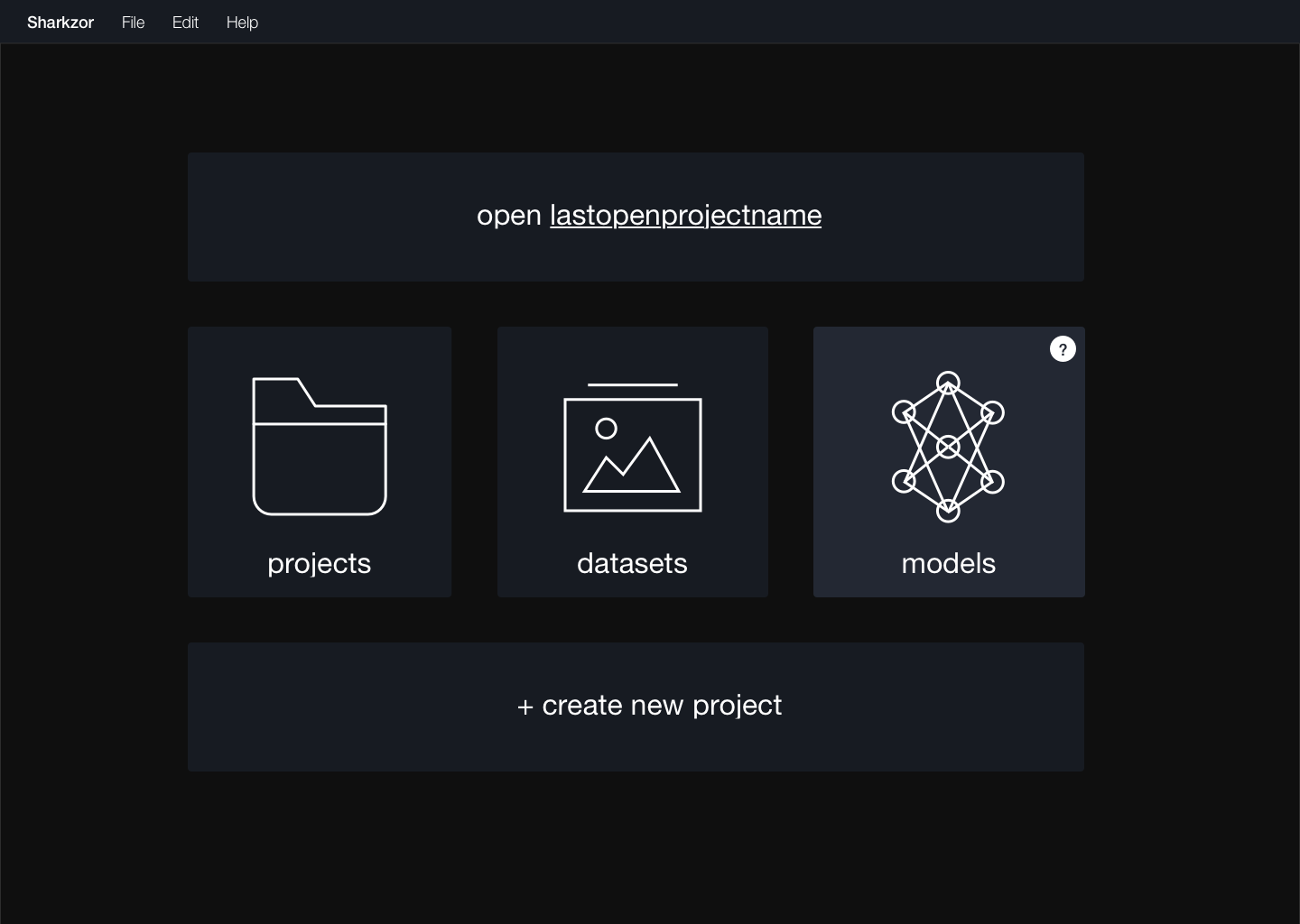

homescreen

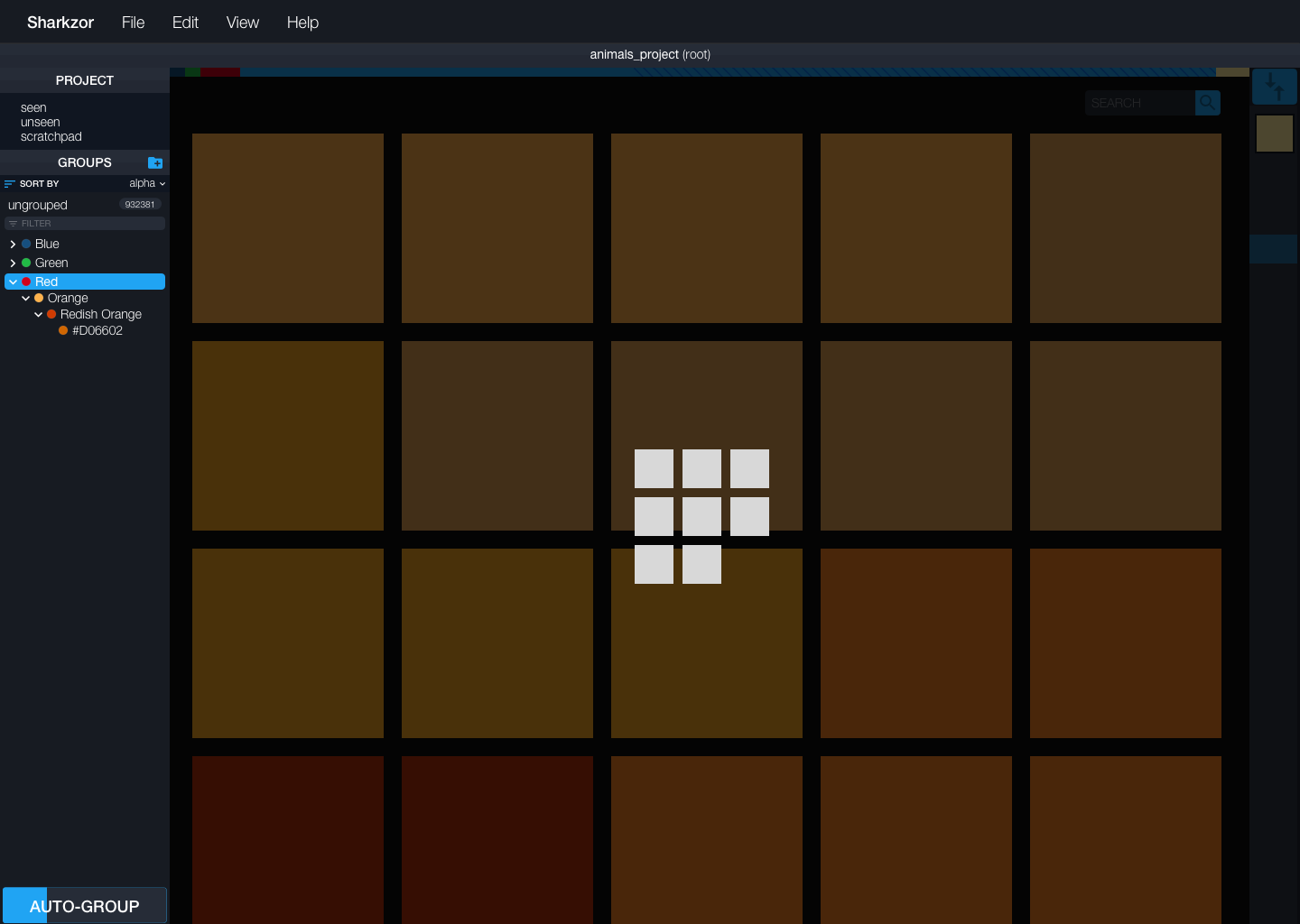

metadata view

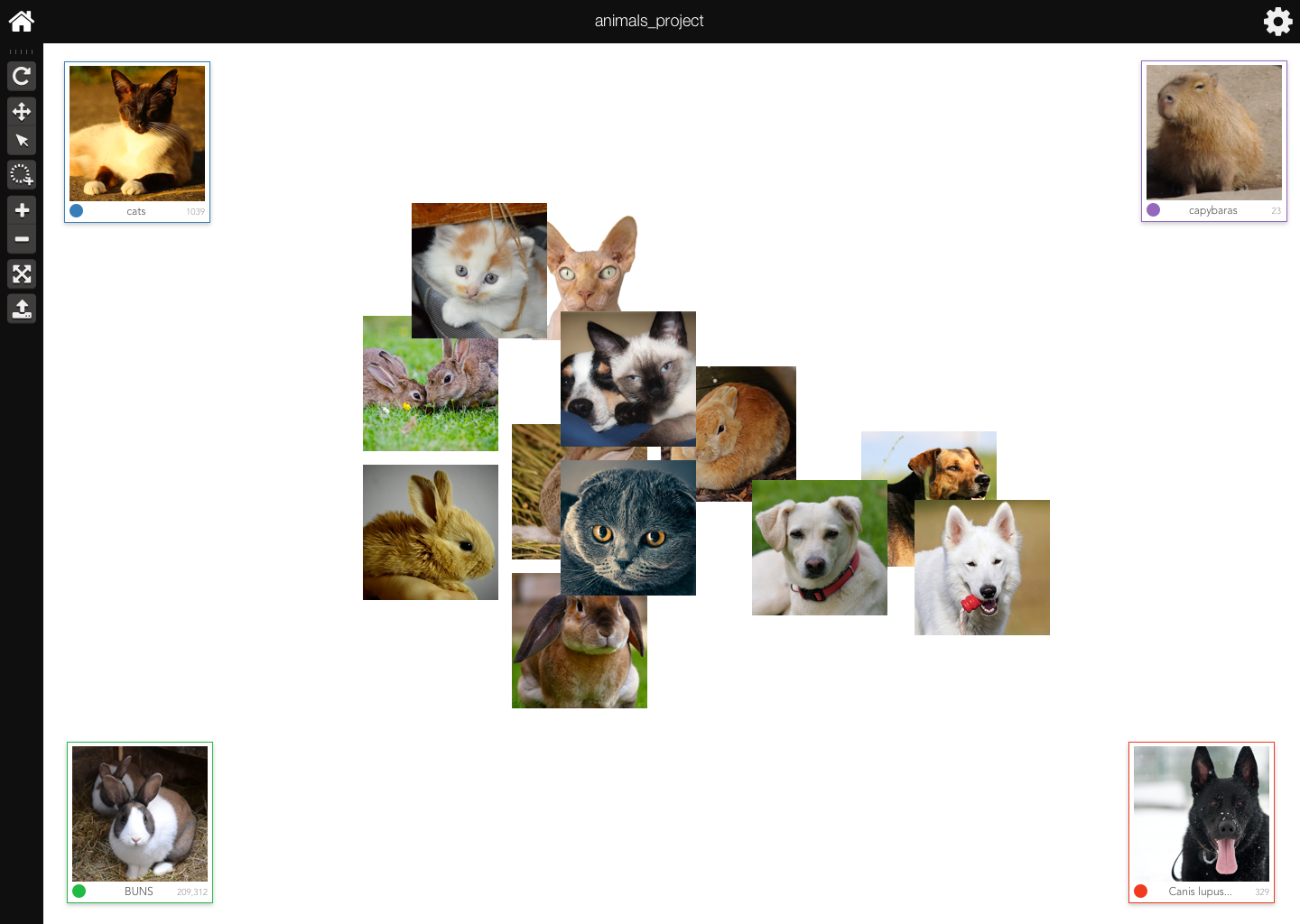

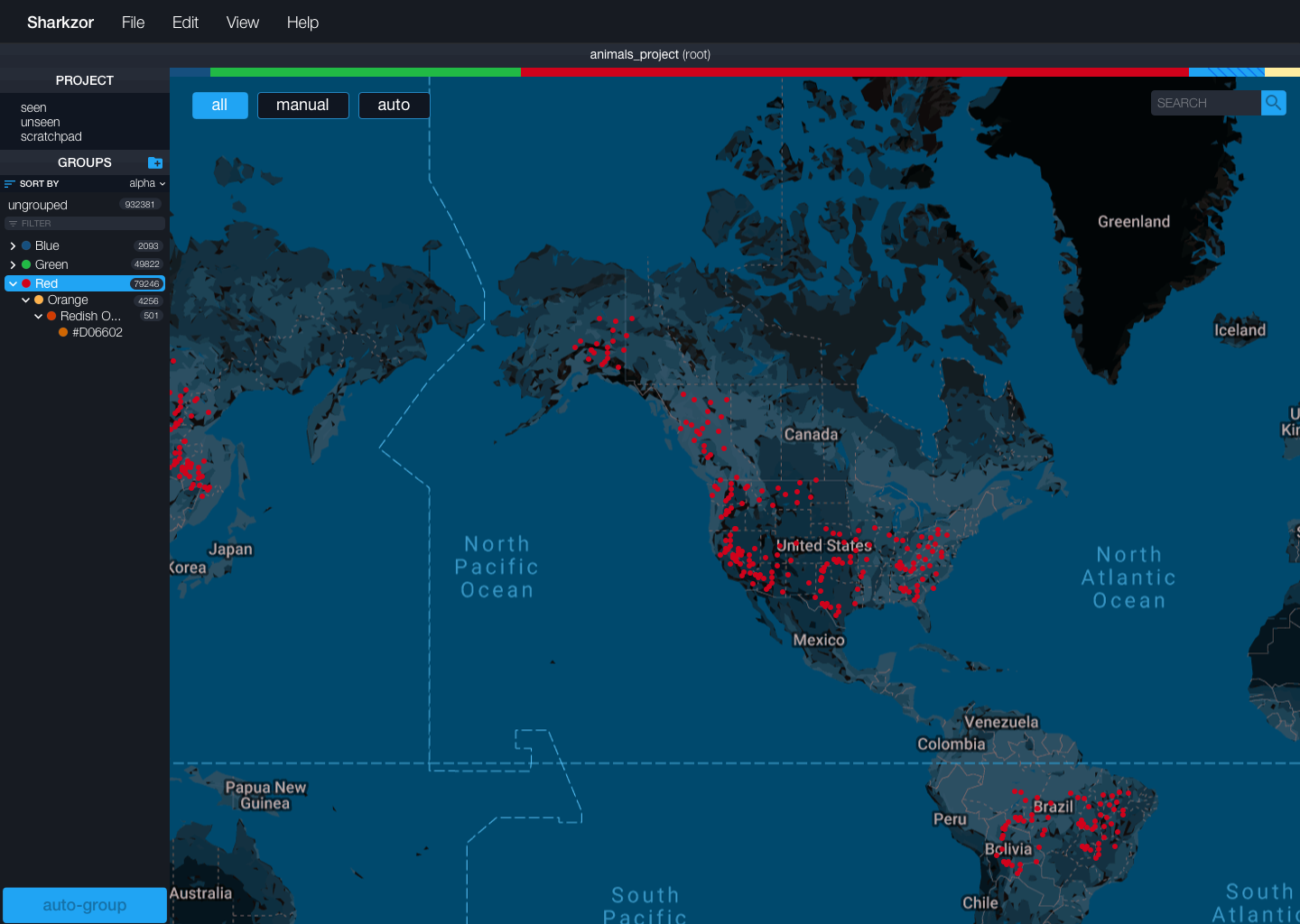

map view

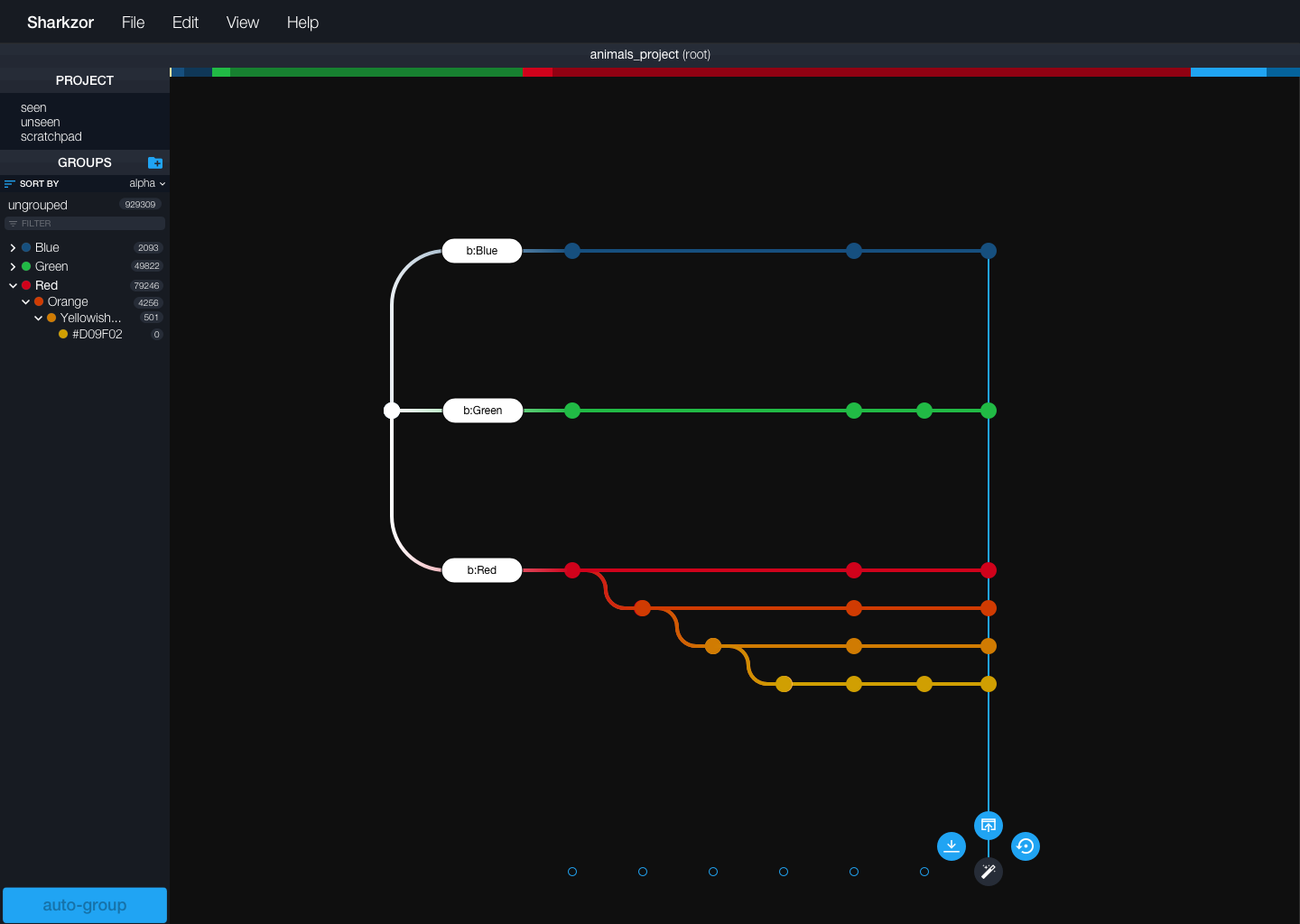

model history exploration

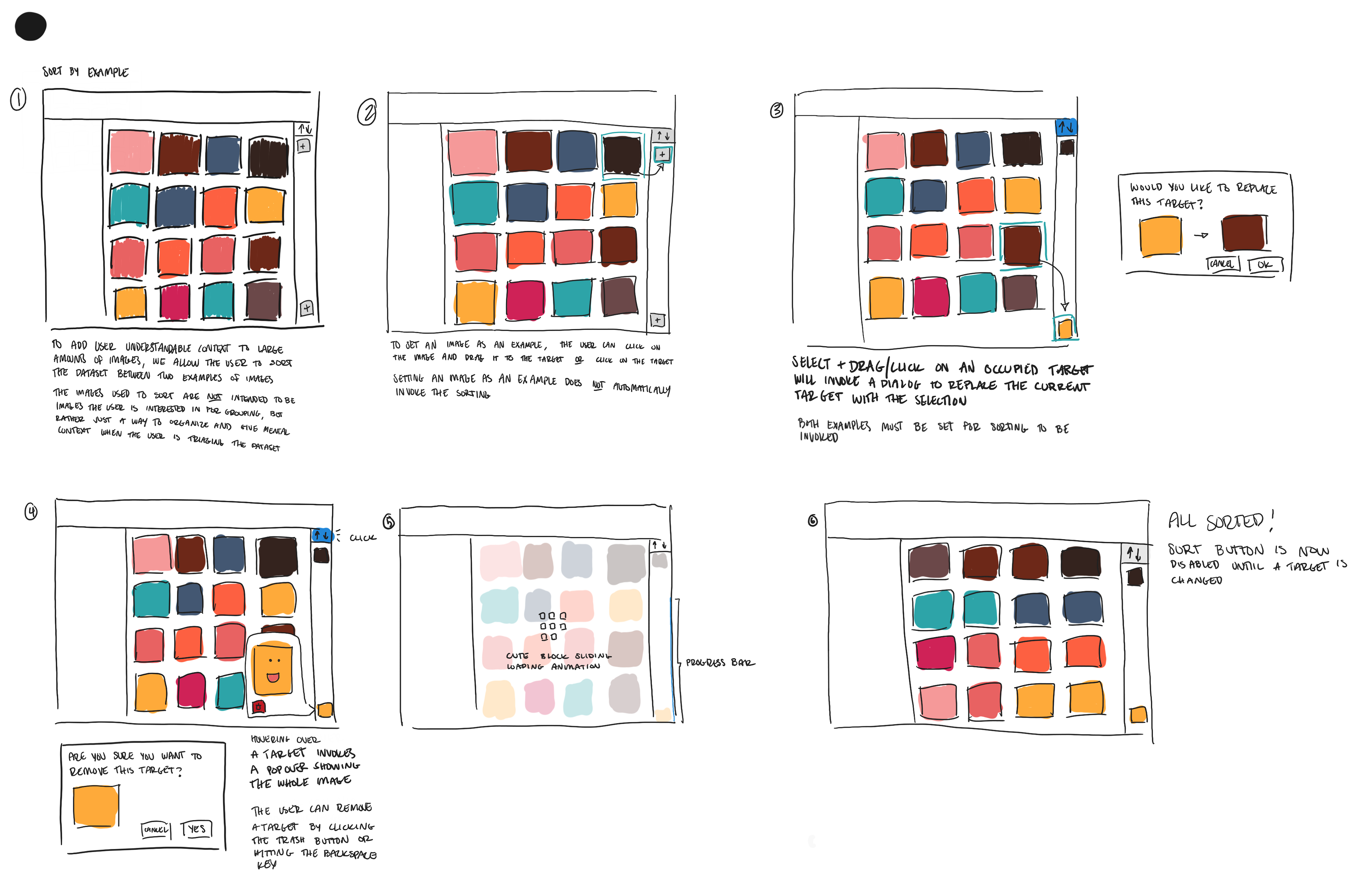

feature highlight / visual sort

To add user-understandable context to large datasets during the triage phase, we allow the user to sort the dataset relative to one target image, or between two, chosen by the user as examples. The target images are not intended to be images the user is interested in grouping, but rather a way to superficially organize the large number of images in a way that makes sense to the user during the triage phase.

In the sketches and mockups below, we use target images of different colors. This particular example sorts the images between the two colors (in the mockups, light yellow to dark brown), adding a color-spectrum context to the large dataset. Target images could be anything the user chooses: content shape, size, even background color.
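
One way to picture the sort is as an ordering of images along an axis defined by the targets. The sketch below is illustrative only and is not Sharkzor's implementation: it assumes each image is already represented by a numeric feature vector (for example, an average color or a learned embedding), and the function name `sort_by_targets` and its parameters are hypothetical. With one target, images are ordered by distance from it; with two, each image is projected onto the line running from the first target to the second.

```python
import math


def sort_by_targets(features, target_a, target_b=None):
    """Return image indices in display order (hypothetical helper).

    features: list of equal-length numeric feature vectors, one per image.
    target_a, target_b: indices of the user-chosen target images.
    """
    a = features[target_a]
    if target_b is None:
        # One target: nearest images first (Euclidean distance).
        scores = [math.dist(f, a) for f in features]
    else:
        # Two targets: scalar projection onto the A -> B axis, scaled so
        # that target A scores 0 and target B scores 1.
        axis = [bj - aj for aj, bj in zip(a, features[target_b])]
        denom = sum(x * x for x in axis)
        if denom == 0:
            raise ValueError("target images have identical features")
        scores = [
            sum((fj - aj) * xj for fj, aj, xj in zip(f, a, axis)) / denom
            for f in features
        ]
    # sorted() is stable, so tied images keep their original order.
    return sorted(range(len(features)), key=scores.__getitem__)


# Toy data: a single "darkness" feature per image, 0.0 (light) to 1.0 (dark).
colors = [[0.9], [0.1], [0.5], [0.0], [1.0]]
order = sort_by_targets(colors, target_a=3, target_b=4)
print(order)  # [3, 1, 2, 0, 4]
```

In the toy data, image 3 (lightest) and image 4 (darkest) play the role of the light-yellow and dark-brown targets, and the remaining images fall between them in order of darkness.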

process / heuristic eval

We were fortunate to work with Dr. Margaret Burnett, who developed the GenderMag heuristic evaluation technique for identifying gender bias in software tools. The entire Sharkzor team (this means design, engineering, data science and business!) and members of PNNL's UX team participated in the evaluation. We proposed a solution for each gender-inclusiveness bug we identified. The GenderMag personas and foundational ideas are now an integral part of the team's design process.

process / usability assessment

The process of defining and validating our published Interactive Machine Learning Heuristics involved participants using the Sharkzor software in a usability study.

future directions

Sharkzor was initially developed for image-based datasets only. In the future, it could also be used with video or even audio datasets, and the features and solutions described here scale to these other media. For example, the metadata-based view could still give analysts an intuitive way to triage and organize a dataset of audio files, and the image lasso feature could be refined to predict the trajectory of items in a video from a single user-supplied instance. Designing forward-thinking, robust features supported by realistic state-of-the-art technology is an integral part of my design process.

Other future work for Sharkzor includes user studies (with surrogate users and tasks), further usability assessments and experiments using the interface to test Interactive Machine Learning Heuristics. IML Heuristics were developed on this project and presented at the IEEE VIS 2018 Workshop on Machine Learning from User Interaction for Visualization and Analytics as well as at the Black in AI Workshop at NeurIPS 2018.

This work was funded by the US Government.